Artificial intelligence is transforming business operations, bringing new levels of productivity, deeper insights, and faster decision-making. As AI becomes an essential part of daily workflows, keeping sensitive data safe is more important than ever. Protecting this information is not just a technical necessity, but also an ethical responsibility. This article explores two key pillars: ensuring the privacy of company data and putting the right access controls in place for AI-powered features.

1. Ensuring the Privacy of Company Data

AI systems rely on large amounts of data to deliver real value. This can include accounting records, customer lists, employee details, financial information, and confidential business strategies. That’s why keeping your data private and secure is absolutely crucial.

Why does privacy matter?

Breaches of sensitive data can seriously damage a company’s reputation, erode customer trust, and lead to legal or regulatory trouble. According to IBM Security, the average cost of a data breach reached 4.45 million dollars in 2023 and rose to 4.88 million dollars in 2024, a 10 percent increase year-over-year. Laws such as the GDPR in Europe and the CCPA in California demand strong data protection measures.

Emerging risks in the AI era

AI systems, unlike traditional software, actually learn from the data they process, sometimes in ways that are difficult to predict. For example, an AI assistant might analyze emails, internal reports, or HR files to help automate tasks. Without proper management and oversight, there is a risk of exposing confidential information. Large language models, such as chatbots or writing assistants, may retain and sometimes accidentally reveal information they have previously seen.

Updated Insights into AI-Related Data Breaches

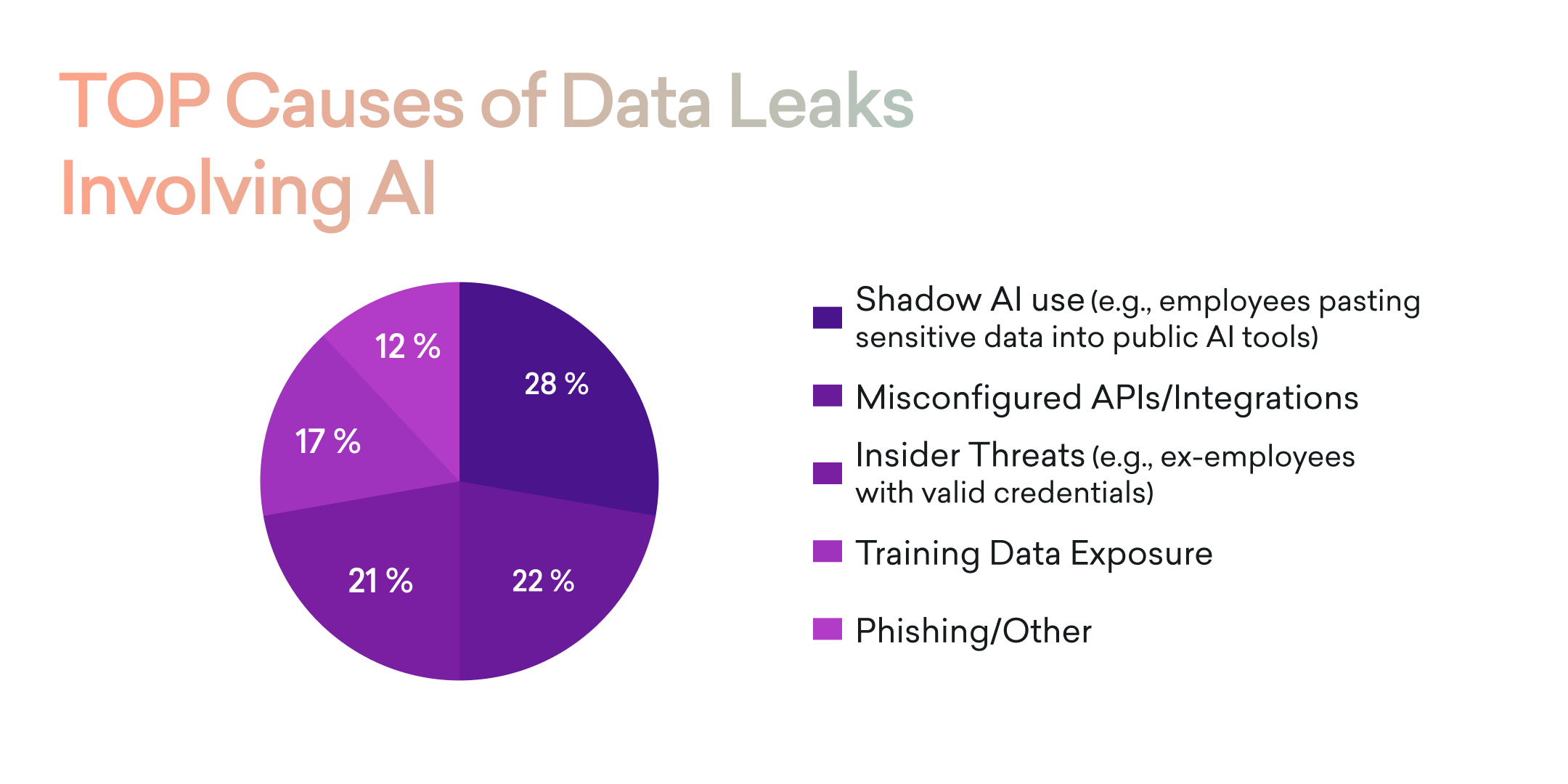

Recent studies highlight the evolving nature of AI-related data breaches:

- Shadow AI and Unapproved Usage: In 2023, shadow AI (employees pasting confidential data into public AI tools) accounted for 28 percent of incidents (IBM, Gartner). By 2024, this trend had intensified, with 38 percent of employees admitting they had submitted sensitive work data to AI tools without company authorization.

- Misconfigured APIs and Integrations: In 2023, misconfigured APIs or third-party integrations caused 22 percent of data leaks.

- Insider Threats: Insider risks, such as former employees retaining access, accounted for 21 percent of leaks in 2023.

- Data Exposure During Vendor Model Training: In 2023, this accounted for 17 percent of breaches.

- Phishing and Social Engineering: In 2023, phishing and social engineering were behind 12 percent of cases. By 2024, credential theft became an even bigger threat. There was a 703 percent increase in credential phishing attacks in the second half of 2024, with 82.6 percent of phishing emails leveraging AI technology.

- AI Tool Vulnerabilities: A 2024 study found that 84 percent of AI tools had experienced data breaches, and 51 percent had faced credential theft incidents.

Best practices for secure AI integration

To minimize risks and stay compliant in the age of artificial intelligence, companies should focus on a few essential practices:

- Data Minimization: Collect and process only the data that is truly necessary for your AI systems to function.

- Anonymization: Remove or mask personal and sensitive details before entering data into AI systems.

- Regular Audits: Continuously monitor data flows and review who has access to what information.

- Clear Policies: Educate employees on what is considered sensitive, establish clear guidelines for responsible use of AI tools, and label sensitive documents.

By staying proactive and following these principles, organizations can make the most of artificial intelligence while keeping sensitive data protected from growing threats. Guidance from frameworks such as the NIST AI Risk Management Framework can also help companies manage AI risks and make data security a top priority at every level of leadership.
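As a rough illustration of the data minimization and anonymization practices listed above, the following Python sketch reduces a record to an allow-list of fields and masks e-mail addresses and phone-like numbers before the text would be handed to an AI tool. The field names, the `ALLOWED_FIELDS` set, and the regular expressions are assumptions made for this example and are not taken from any specific product. Real deployments usually need far more robust detection of personal data.

```python
import re

# Hypothetical allow-list: only these fields are passed on to the AI tool.
ALLOWED_FIELDS = {"ticket_id", "subject", "body"}

# Simplified patterns for illustration; they will not catch every format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimize(record: dict) -> dict:
    """Data minimization: drop every field that is not on the allow-list."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def mask(text: str) -> str:
    """Anonymization: replace e-mail addresses and phone-like numbers."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_for_ai(record: dict) -> dict:
    """Minimize first, then mask every remaining string value."""
    return {
        k: mask(v) if isinstance(v, str) else v
        for k, v in minimize(record).items()
    }


record = {
    "ticket_id": 1042,
    "subject": "Invoice question",
    "body": "Please call Jane at +1 555 010 9999 or write to jane.doe@example.com.",
    "salary": 85000,
}
safe = prepare_for_ai(record)
print(safe)
```

In this sketch, `safe` no longer contains the `salary` field, and the contact details in `body` are replaced by placeholders. The name "Jane" survives, because pattern matching alone does not catch names. That gap is one reason regular audits remain necessary.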

2. Managing Access to AI-Powered Features

As AI becomes embedded in daily operations, determining who can access specific AI functionalities is crucial. For example, an AI assistant capable of automating HR tasks, like employee offboarding, should not be accessible to all employees.

Understanding "Agentic Permission"

Agentic permission means setting clear boundaries on who or what has the authority to perform certain actions within an AI system. It's especially vital for functions that carry legal, financial, or reputational risks.

Importance of robust access controls

Implementing stringent access controls helps prevent mistakes and intentional misuse. It also facilitates accountability and compliance with legal standards.

Strategies for effective permission management

- Role-Based Access: Assign AI functionalities based on job roles, ensuring only authorized personnel can execute specific tasks.

- Multi-Level Approval: For high-stakes actions, set up a process that requires confirmation from several authorized individuals. This ensures that important decisions are reviewed and validated by people through Human-in-the-Loop mechanisms, especially when the action is suggested by AI.

- Detailed Logging: Maintain comprehensive records of who accesses sensitive AI features and when.

- Employee Training: Educate staff about the responsibilities and limitations associated with AI tools.

- Human-in-the-Loop (HITL): Incorporate human oversight in AI decision-making processes, especially for critical tasks. HITL ensures that AI outputs are reviewed and approved by humans, reducing the risk of errors and enhancing accountability. For instance, Google Cloud emphasizes the importance of HITL in maintaining control over AI-driven processes.
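The strategies above can be combined in a small permission check. The Python sketch below is a minimal example under stated assumptions: the role names, the `ROLE_PERMISSIONS` and `REQUIRED_APPROVALS` tables, and the in-memory `audit_log` are all hypothetical and stand in for whatever identity system and log storage a company actually uses. It shows role-based access, a multi-level human approval step for a high-stakes action, and a log entry for every decision.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping of roles to the AI actions they may trigger.
ROLE_PERMISSIONS = {
    "hr_manager": {"offboard_employee", "summarize_policy"},
    "employee": {"summarize_policy"},
}

# Hypothetical high-stakes actions and the number of human approvers required.
REQUIRED_APPROVALS = {"offboard_employee": 2}

# Stand-in for append-only, tamper-evident log storage.
audit_log = []


@dataclass
class ActionRequest:
    action: str
    requested_by: str
    role: str
    approvers: set = field(default_factory=set)


def log(event: str, request: ActionRequest) -> None:
    """Detailed logging: record who asked for what, when, and the outcome."""
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "event": event,
        "action": request.action,
        "user": request.requested_by,
    })


def authorize(request: ActionRequest) -> bool:
    """Allow the action only if the role permits it and enough humans approved."""
    if request.action not in ROLE_PERMISSIONS.get(request.role, set()):
        log("denied_role", request)
        return False
    # Human-in-the-loop: the requester cannot approve their own request.
    approvals = request.approvers - {request.requested_by}
    if len(approvals) < REQUIRED_APPROVALS.get(request.action, 0):
        log("pending_approval", request)
        return False
    log("authorized", request)
    return True


request = ActionRequest("offboard_employee", "alice", "hr_manager", {"carol"})
print(authorize(request))   # one approver is not enough for this action
request.approvers.add("dave")
print(authorize(request))   # two distinct approvers satisfy the rule
```

Keeping the permission tables as plain data makes them easy to review during an audit, and logging every outcome, including denials, gives a record of attempted as well as successful use.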

Cultivating a Security-Conscious Culture

Technology alone isn't sufficient to ensure data security. A company culture that prioritizes security, encourages open communication, and promotes continuous learning is essential. Employees should feel empowered to question and understand AI tools, ensuring responsible usage.

Quick Security Audit for Leadership

- Are all AI tools in use within the company documented?

- Is there an up-to-date data classification guide?

- Is there clarity on who holds responsibility for AI-related risks at the executive level?

- Are offboarding processes automated and regularly audited?

- Are role-based permissions for AI tools tested and enforced?

- Are secure environments used for experimenting with new AI models?

- Can the company produce logs of AI inputs and outputs if required by regulators?

Answering "no" or "unsure" to any of these questions indicates areas needing immediate attention.

Conclusion: Balancing Innovation and Security

While AI offers transformative benefits, it also introduces new challenges in data security. Organizations must strike a balance between leveraging AI's capabilities and implementing robust safeguards. By combining technological solutions with clear policies and a culture of security awareness, companies can harness AI's potential without compromising sensitive data.

Sources and Further Reading:

IBM Security: Cost of a Data Breach Report 2024

NordLayer: Biggest Data Breaches of 2024

Tech Advisors: AI Cyber Attack Statistics 2025

Thunderbit: Key AI Data Privacy Statistics to Know in 2025

Cybernews: 84% of AI Tools Leaked Data, New Research Shows

Google Cloud: Human-in-the-Loop (HITL) Overview

IBM Security: Cost of a Data Breach Report 2023

Gartner: The Top Security Risks of Generative AI

Ponemon Institute: Cost of Insider Risks Global Report 2023

NIST AI Risk Management Framework

NIST AI RMF Adoption Survey 2024

Gartner AI Governance and Risk Survey 2024
